Amongst other things, in our lab session this week we tossed in a few early-days prototypes that use media such as images and audio.

Why do we need additional media?

111 online has untapped potential to help people understand the questions they're asked during triage, by supplementing the question text with other media. For example, people could:

- hear the sounds of rasping or whistling breath, wheezing and croup

- watch videos of techniques such as the meningitis glass test

- see the varying colours of sick, poo and blood

- see images of broken, dislocated or swollen limbs

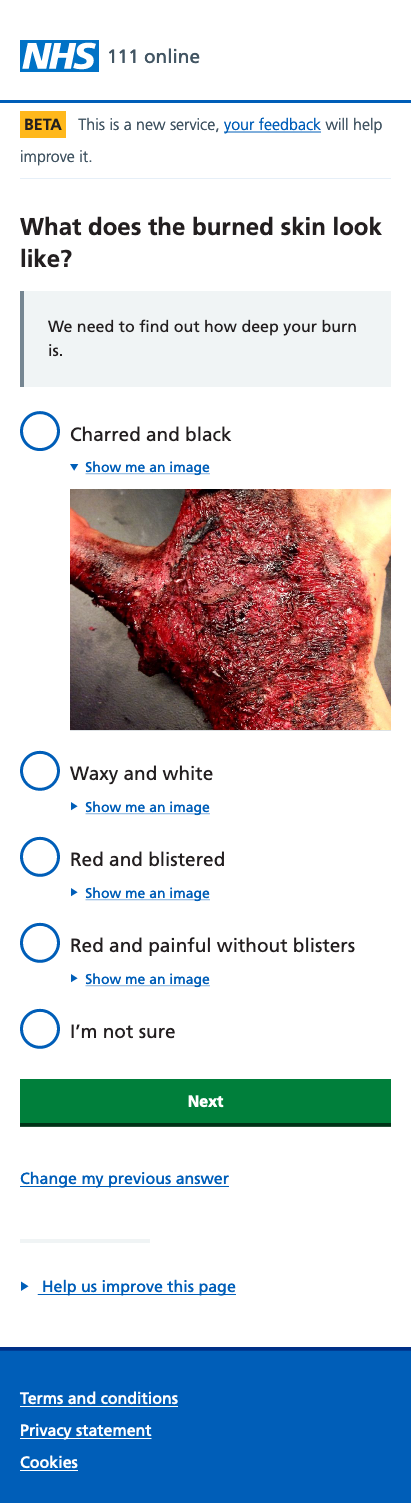

- be shown the varying degrees of burn

Our hypothesis is that if we add media to appropriate questions, people will be better able to answer triage questions confidently.

Prototyping

We identified roughly 50 triage questions as tricky to answer from text descriptions alone. Many of them need to describe what something might look or sound like.

We took a few of these questions and quickly bashed out some prototypes:

- burns

- “floaters” in the eye

- the meningitis rash (and glass test)

- expiratory wheezing

In the lab

Taking our prototypes into the lab, we wanted to find out a few things:

- the effectiveness of additional media in helping a user answer a question

- whether our prototypes were basically usable

- reactions to what some might consider graphic imagery

Some findings

In the lab, our prototypes went down well. Participants felt that the additional media would help them select an answer to the questions posed.

Obviously this was a lab setting and the participants weren't experiencing any symptoms, so it's difficult to tell whether the media would increase the number of accurate answers. On the other hand, it made it easy to prove the negative: "oh, definitely not that."

But might people get a question "wrong" because they interpret a piece of media as meaning their symptoms are more or less acute than they are?

The counter-argument is: how is this different from interpreting the language used to write the triage? Can we ever really understand each other with true accuracy? I can't explain Wittgenstein (or understand him, half the time), but this comic puts the idea neatly into context.

Our reckon is that layering media on top of the base text should improve people's ability to choose the answer that's "right" for their situation.

Gross!

Unsurprisingly, the burns imagery drew visible reactions from participants, with varying expressions of distaste. One participant found it quite hard to look at.

But it's worth noting the difference between observation and insight, and the importance of need and context.

Our context was a lab setting. No one was suffering from burns at the time, so no one needed to see a gross burns image. In another context (having been burnt quite badly), that need becomes real.

On the other hand, during a triage, you may pass through a question like this in order to rule something out. If you can confidently answer “nope, not that” without having to view graphic imagery, perhaps we shouldn’t shove such imagery in your face.

We observed people seeing graphic content and reacting to it in various ways. Everyone understood (and mentioned) that graphic imagery would help them answer the question if they were in such a situation.

Our insight is:

- showing graphic content can be really effective; it's certainly noticeable

- reactions of distaste aren't necessarily a reason to reject the idea

- we might need a way to forewarn people when the content may not exactly match their context

What next?

One thing we're going to look at is how we might warn people that graphic content is present. Because the current interaction is a "show me…" control, we can try something minimal and very simple: "… (graphic imagery)".

There's also some thinking to do around loading if we're really worried about people being squeamish: is there a way to minimise the chance of a flash of gross images as they load? Looking into render blocking might show it's a non-issue, but we don't know that yet.

Other challenges are bigger. Where do we source media from? What about cost? What about differing skin tones?

These are all worth looking into, but these early tryouts show the idea certainly has legs.